There Is No Spoon

OpenAI's 12 Days of Shipmas: No Partridge, Just Processors

ChatGPT's Getting Smarter (but your wallet could get a lot lighter)

AI Edge for Higher Ed

Welcome, AI Explorers.

This week: OpenAI is dropping daily surprises in their "12 Days of Shipmas" campaign, while LinkedIn has transformed into an unexpected AI writers' colony. Between new "reasoning" models that promise deeper thinking and video generation that's finally ready for prime time, there's plenty to unpack. Let's cut through the hype and focus on what actually matters for educators. In this edition, you'll find:

A clear-eyed look at OpenAI's new o1 model (and whether the $200 ChatGPT Pro price tag is worth it) 💰🔍

The surprising truth about AI content detection in academia 🎓🔎

A Microsoft privacy concern that might make you rethink your prompts 🔐⚠️

A real tear-jerker on what it means to be human in the age of AI 😢❤️

Time to explore...

Prompt of the Week 💭

Not exactly a prompt, but Ethan Mollick (of course) identifies 15 use cases and 5 misuse cases for generative AI. I've distilled and combined them into the top 7 use cases and top 3 misuse cases as a sneak peek. Definitely go read the whole article, though.

Use Cases

Generating high volumes of ideas or content

Tasks where you have expertise to verify outputs

Basic summarization and information translation

Getting unstuck on projects

Coding assistance

Getting multiple perspectives or second opinions

Routine data/info entry

Misuse Cases

Learning new concepts (reading and synthesis need human effort). I don't agree with this statement on its own, but I do agree that "asking for a summary is not the same as reading for yourself. Asking AI to solve a problem for you is not an effective way to learn." AI can certainly help clarify things you are trying to learn and generate problems, challenges, and questions to help you learn.

High accuracy is essential (AI hallucinations are hard to spot)

You don't understand AI's limitations (hallucinations and beyond)

Bottom Line: AI is most valuable when it amplifies existing expertise or handles routine tasks. For deep learning and high-stakes work, human engagement remains essential.

AI App Spotlight 🔍

ChatGPT's o1 model is a so-called "reasoning model," which means it is best suited to helping solve challenging problems. It does this by "thinking" for longer: it deliberates internally, working through and refining its response before presenting it to the user.

Comparison Table: OpenAI's o1 vs. GPT-4o

| Feature/Aspect | OpenAI o1 | GPT-4o |
|---|---|---|
| Model Focus | Advanced reasoning and "chain of thought" tasks | General-purpose language understanding and generation |
| Primary Strength | Tackling complex problem-solving scenarios requiring multi-step reasoning | Versatile across a broad spectrum of tasks, including text generation, summarization, and chat interactions |
| Best Use Cases | Solving intricate engineering or scientific challenges | Writing and content generation |
| Reasoning Methodology | "Chain of thought" with internal deliberation for better refinement of responses | Predictive modeling optimized for natural and coherent text generation |
| Computational Efficiency | Higher computational intensity due to internal deliberation | More computationally efficient for general tasks |
| Adaptability | Best suited to specialized, high-complexity scenarios | Broadly adaptable to various domains and less specialized tasks |
| Accessibility | Positioned as a premium model for professional and enterprise use cases | Designed for general users and businesses |

Other use cases for o1 include coding and research (see videos here).

Free ChatGPT users currently do not have access to o1, and even ChatGPT Plus users have only limited access (50 o1 messages per week).

To be honest, I have not found a use for the o1 model myself. I currently don't do a lot of coding or research, and I am not yet sold on the idea of handing off important work to an AI where high accuracy is essential (e.g., research and coding). I do plan to investigate using it to help develop a new course: I'll play around with creating a course activity plan and lesson plans based on a learner profile and learning outcomes. I will let you know how it goes.

But wait, there's more. Along with o1 came ChatGPT Pro and access to o1 pro, an even more advanced version of the reasoning model that "thinks" harder than o1 and supposedly gives better results. Here is a nice comparison between o1 and o1 pro with some actual testing.

There is one big downside to o1 pro: access costs US$200/month. I guess I'm out, and I won't be able to give you my first-hand impressions until that price comes down a bit.

Whether o1's enhanced reasoning capabilities justify its premium pricing will likely depend on your specific needs, but for most users, GPT-4o's versatility remains the practical choice.

AI News of the Week 📰

A wonderful, whimsical, creative piece on what it means to be human in the age of generative AI. Okay, who's chopping onions?

Ottawa is putting its money where its neural networks are, announcing up to $5M in funding for BC organizations ready to commercialize or adopt AI solutions, with a focus on improving human health, environmental sustainability, and economic resilience. This $32M fund from Pacific Economic Development Canada will support businesses and non-profits in BC.

LinkedIn has become an AI writer's paradise, with over half of longer posts now AI-generated. Even robots have learned how to humblebrag about their career achievements.

Connect Claude to Google Maps, your local file system, browsers, your own databases, and more. Anthropic's Model Context Protocol (MCP) is an open protocol that enables integration between a large language model and external data and tools. Using it currently requires the (free) Claude Desktop app and a bit of technical know-how, but expect further integration with web apps and Claude itself.
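For the technically curious: MCP messages use the JSON-RPC 2.0 format. The minimal sketch below (Python standard library only) builds the kind of request a client such as Claude Desktop sends when it asks a connected MCP server to run a tool. The `tools/call` method comes from the MCP specification; the helper `make_tool_call`, the tool name `search_places`, and its arguments are hypothetical placeholders, not a real server's API.

```python
import json


def make_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Hypothetical helper: serialize a JSON-RPC 2.0 request asking an
    MCP server to run one of its tools."""
    message = {
        "jsonrpc": "2.0",          # MCP messages follow JSON-RPC 2.0
        "id": request_id,          # lets the client match the server's reply
        "method": "tools/call",    # MCP method for invoking a tool
        "params": {
            "name": tool_name,       # which tool the server should run
            "arguments": arguments,  # tool-specific inputs
        },
    }
    return json.dumps(message)


# "search_places" is a made-up tool name used only for illustration.
request = make_tool_call(1, "search_places", {"query": "libraries near campus"})
print(request)
```

In practice, the server first advertises its tools (via a `tools/list` request), and the model decides which one to call; this sketch only shows the shape of the message.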

An easily accessible explanation of LLMs. I may have shared this before, but it's worth sharing again.

12 Days of Shipmas from OpenAI

The 12 Days of Shipmas is a clever marketing ploy to get people excited and talking (even more) about OpenAI, ChatGPT, and generative AI. So far (as of December 9, 2024), we have had 3 days (weekends don't count).

Day 1: The o1 reasoning large language model (see the AI App Spotlight in this newsletter), along with a $200/month ChatGPT Pro plan.

Day 2: Reinforcement Fine-Tuning Research Program. Yawn. I didn't even read this one, so I'm not sure what it's about.

Day 3: Sora! This is OpenAI's long-promised video generation model (first announced Feb. 15, 2024). It is now available to users in most of the world. ChatGPT Plus subscribers will be able to do 50 video generations per month, and $200 ChatGPT Pro subscribers will be able to do unlimited generations.

9 more days to go

AI-Powered Pedagogy 👩‍🏫

Large language model providers may be using your prompts to train future iterations of their LLMs, but even worse, there may be clauses in the terms of use that allow humans (at Microsoft) to read your prompts.

I hate finding out that I fit into a particular mould, but Daniel Stanford has nailed my take on AI in Education with this "AI Festivus" article. Read the part about the talking points in a typical "AI in Education" presentation.

An interesting library-led AI project: "A Mellon-funded project to develop an ethical, public-interest way to incorporate books into artificial intelligence."

AI Detection: A Literature Review, a slide deck by Chris Ostro and Brad Grabham at the University of Colorado Boulder. Watch the full presentation here.

Upcoming Events

These upcoming events caught my eye. I can't vouch for their quality, but they touch on relevant themes and certainly might be worth checking out.

Workshops

1 Hour to AI Proficiency. Dec 10, 9-10 am. This one will be a bit of a promotion for further (paid) training, but I have found this provider's free workshops useful, if more business-focused.

Build No-Code AI-powered Apps for the Classroom. January 15, 12-1 pm. Experiments and projects with open-source, AI-powered "micro-apps". See also https://appsforeducation.ai

Conferences

AI-Cademy. Canada Summit for Post-Secondary Educators. March 6-7 in Calgary

The Teaching Professor Conference on AI in Education. December 2-4, 2024 (on-demand access to sessions is still available until Feb 17, 2025)

The AI Show and Summit from ASU (Arizona State University) and GSV (Global Silicon Valley) Ventures. April 5-7 (Expo) and April 6-9 (Summit) in San Diego

The mAIn Event

They Who Shall Not Be Named

For about a week, ChatGPT users across the internet were complaining that ChatGPT would not say the name "David Mayer", no matter what they did. I thought this was just a silly internet meme with little evidence behind it. Then I came across this Ars Technica article reporting that ChatGPT actually blocks several names.

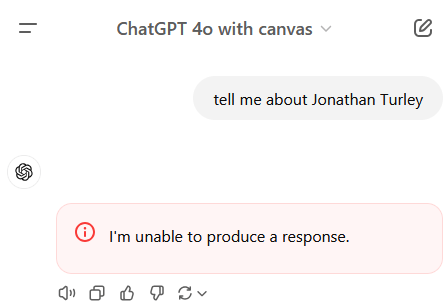

Ars Technica is a reputable, technology-focused publication, but I still didn't fully believe it. The article said that if your name is Brian Hood, Jonathan Turley, Jonathan Zittrain, David Faber, or Guido Scorza, ChatGPT would not talk about you. It seemed a little far-fetched, so I had to try it out myself. I started with simple requests like "tell me about Jonathan Turley" and got back "I'm unable to produce a response".

Even when I stepped it up a bit and tried tricking ChatGPT, it still wouldn't say the names.

According to the Ars Technica article, this is not a random phenomenon: it seems to be due to lawsuits or controversies over misinformation ChatGPT provided about these people. Blocking certain names like this might mitigate some of OpenAI's short-term legal risks, but I don't see how they can keep maintaining an ever-growing list of blocked names. And what are they going to block next? OpenAI and other AI companies certainly face many challenges regarding accuracy and fairness, but I hope that seemingly arbitrary censorship in AI systems does not become the go-to solution.

Glossary of Terms

Reasoning model

A type of AI model designed to break down complex problems into smaller steps, similar to human problem-solving. OpenAI's o1 is an example, taking more time to "think through" responses before answering.

Neural networks

Computer systems inspired by the structure of the human brain that can learn from examples.

Hallucinations

When AI models generate false or misleading information that sounds plausible but isn't factual.

LLM (Large Language Model)

AI systems trained on vast amounts of text data to understand and generate human-like language.

Model Context Protocol (MCP)

A protocol that enables integration between large language models and external data and tools, allowing Claude to connect with systems like Google Maps and local file systems.
